Picking a Kubernetes Policy Engine Without Losing Your Mind

Enforcing Kubernetes policies sounds simple… until you have to do it in production at scale. Block privileged pods? Sure. Inject sidecars automatically? Easy, right?

But once you're managing 1,000+ pods per hour across multiple clusters, that “simple” decision becomes one of the largest sources of platform toil. In 2026, the policy landscape has matured, but the best engine still depends on your specific "pain tolerance" for admission latency, operational overhead, DSL complexity, and governance sprawl.

Your options as of this writing:

| Engine | Language | Work Mode | Best For |
| --- | --- | --- | --- |
| Native (VAP/MAP) | CEL | In-process (API server) | Low-latency built-in guardrails |
| Kyverno | YAML + CEL | Admission webhook | K8s-native automation + mutation |
| OPA Gatekeeper | Rego | Admission webhook | Enterprise compliance + cross-stack parity |
| Kubewarden | Wasm (Go/Rust/TS) | Sandboxed webhook | Developer environment + OCI distribution |

Native Policies: ValidatingAdmissionPolicy and MutatingAdmissionPolicy

Since ValidatingAdmissionPolicy reached GA in Kubernetes 1.30, native policies are now the default first stop for any sane platform team.

- The Pro: All about speed. Native policies run directly inside the kube-apiserver. No network hop. No TLS handshake between the API and a webhook. Latency is often < 2ms.
- The Tradeoff: "Peephole" logic. Native policies are strictly isolated. They only see the object being admitted. If you need to check a Vault secret, query an external API, or look at a different Namespace, native won't cut it.

Use it for 80% of baseline guardrails. If a rule can be written in Common Expression Language (CEL), write it here first: for instance, privileged-pod bans, hostPath bans, and required labels.

Kyverno: The Mutation King

Kyverno remains the favorite for teams that want policy authoring to feel like writing a standard K8s manifest.

- The Pro: Advanced generation. Kyverno excels where native policies fall short: resource generation. Ever wanted to automatically spin up a Secret or NetworkPolicy whenever a new Namespace is created? Kyverno does this natively without a custom controller.

Kyverno has increasingly adopted CEL-based rules, allowing it to behave as a high-level orchestrator for native policies while still providing powerful background scanning. Kyverno is approachable, which is probably the key factor behind its rapid adoption.

The best choice for platform teams automating the "boring stuff" without learning a new language or building a full custom K8s operator.

OPA Gatekeeper: The Enterprise Heavyweight

OPA remains the choice for organizations that view policy as a global architectural layer.

- The Pro: Cross-platform parity. You can use the same Rego logic to validate a Terraform plan, enforce AWS IAM governance, and check a K8s Deployment.
- The Con: The "policy priest" problem. Rego is powerful but has a steep learning curve. Even in 2026, with AI-assisted code generation, debugging Rego at 2 AM is still not fun. You often end up with one guru who understands the logic, creating a massive bottleneck.

Essential only if governance must extend beyond the Kubernetes world.

Kubewarden: Policies as Software Artifacts

Kubewarden has gained traction by treating policies like standard software components and, let's be honest, we all like writing code.

- The Pro: General-purpose languages. With strong SDK support, you can write policies in TypeScript, Go, Rust, or any Wasm-compatible language. No need to learn yet another DSL.

The principal advantage is OCI-native delivery. Policies are compiled to WebAssembly and shipped as OCI images. They are versioned and can be unit tested and scanned just like any application container.

Best for teams that want "policy as code" to actually mean code.

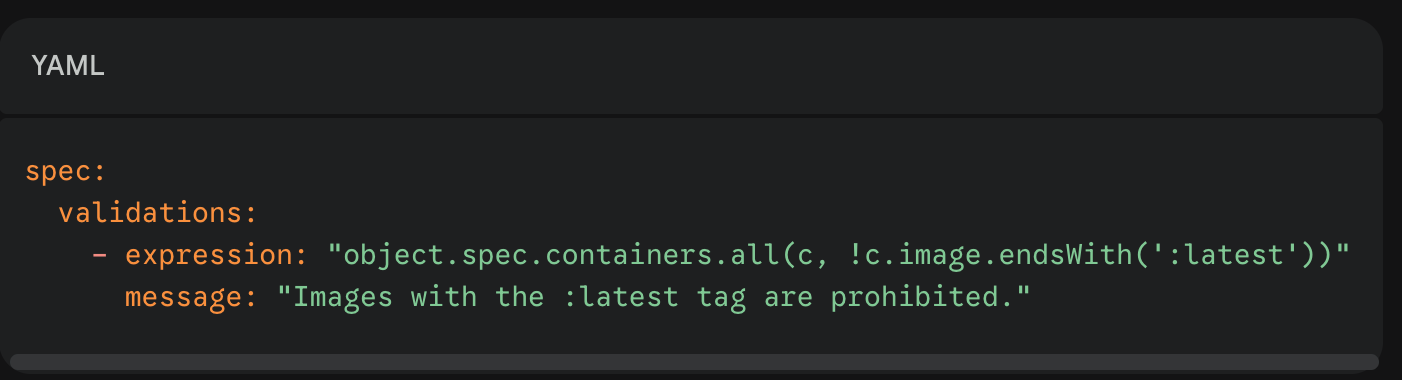

Deep Dive: Three Ways to block :latest tags

Same guardrail, different feel:

Native CEL

OPA (Rego)

Kubewarden (TypeScript)
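To give a feel for the "policy as code" flavor, here is a minimal sketch of the :latest check written as plain TypeScript. It deliberately does not use the Kubewarden SDK: the `Container`, `PodSpec`, and `ValidationResult` shapes and the `validatePodSpec` entry point are hypothetical names chosen for illustration, and a real policy would wrap this logic in the SDK's request and response handling. Treating untagged images as latest and accepting digest-pinned images are assumptions too, not something the engines mandate.

```typescript
// Minimal shapes for the parts of a Pod this check reads (not the full Kubernetes types).
interface Container {
  name: string;
  image: string;
}

interface PodSpec {
  containers: Container[];
  initContainers?: Container[];
}

interface ValidationResult {
  allowed: boolean;
  message?: string;
}

// Returns true when the image reference has no tag or uses ":latest".
// Assumption: digest-pinned references (image@sha256:...) are acceptable.
function usesLatestTag(image: string): boolean {
  if (image.indexOf("@") !== -1) {
    return false;
  }
  // Only inspect the part after the last "/" so that a registry port
  // (registry:5000/app) is not mistaken for a tag.
  const lastSegment = image.substring(image.lastIndexOf("/") + 1);
  const colon = lastSegment.indexOf(":");
  if (colon === -1) {
    return true; // no tag: the runtime defaults to "latest"
  }
  return lastSegment.substring(colon + 1) === "latest";
}

// Hypothetical entry point, not a Kubewarden SDK function.
function validatePodSpec(spec: PodSpec): ValidationResult {
  const all = spec.containers.concat(spec.initContainers ?? []);
  const offenders = all.filter((c) => usesLatestTag(c.image)).map((c) => c.name);
  if (offenders.length > 0) {
    return {
      allowed: false,
      message: `containers using a mutable ':latest' tag: ${offenders.join(", ")}`,
    };
  }
  return { allowed: true };
}

const verdict = validatePodSpec({
  containers: [
    { name: "web", image: "nginx:latest" },
    { name: "api", image: "registry:5000/api:1.4.2" },
  ],
});
console.log(verdict);
```

In this example the spec is rejected and the message names only the web container. Because the check is an ordinary function, it can be unit tested like any other code before it is compiled and shipped.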

Performance at scale: The Policy Tax

Admission is synchronous. If your engine is slow, your cluster feels sluggish. During a "rollout storm" (500 pods deploying simultaneously), the overhead adds up. As an order of magnitude, you can expect:

- Native VAP: Near zero overhead (~1–2ms)
- Kubewarden: ~10–25ms (compiled Wasm execution)
- Kyverno / Gatekeeper: ~40–100ms (webhook overhead + network hop + interpreted logic)
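To make "the overhead adds up" concrete, the sketch below multiplies the rough per-request figures above by the 500-pod storm. It assumes one admission call per pod and a single policy evaluation per call, which is a simplification; the engine keys and the `cumulativeOverheadMs` helper are names made up for this example.

```typescript
// Per-request admission overhead in milliseconds, using the rough ranges listed above.
interface LatencyRange {
  minMs: number;
  maxMs: number;
}

const overheadByEngine: Record<string, LatencyRange> = {
  "native-vap": { minMs: 1, maxMs: 2 },
  kubewarden: { minMs: 10, maxMs: 25 },
  "kyverno-gatekeeper": { minMs: 40, maxMs: 100 },
};

// Cumulative admission time added across a burst of requests.
// This is a sum over requests, not wall-clock time: the API server
// processes admission requests concurrently.
function cumulativeOverheadMs(engine: string, requests: number): LatencyRange {
  const range = overheadByEngine[engine];
  if (range === undefined) {
    throw new Error(`unknown engine: ${engine}`);
  }
  return { minMs: range.minMs * requests, maxMs: range.maxMs * requests };
}

const stormSize = 500; // pods in the "rollout storm" example
for (const engine of Object.keys(overheadByEngine)) {
  const total = cumulativeOverheadMs(engine, stormSize);
  console.log(`${engine}: ${total.minMs / 1000}-${total.maxMs / 1000} s of added admission time`);
}
```

Under these assumptions the storm costs 0.5 to 1 s of cumulative admission time with native policies, 5 to 12.5 s with Kubewarden, and 20 to 50 s with a Kyverno or Gatekeeper webhook. Stacking several policies per request pushes those numbers up further.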

Most engines work fine at 5 policies; most teams break at 200. Always benchmark your control plane throughput before committing to a webhook-heavy strategy.

But don't take our word for it; verify it yourself.

To see this policy tax in your own environment, we recommend using kube-burner. This tool lets you easily simulate high-concurrency admission requests and measure exactly how much latency your policies add to the kube-apiserver.

The metric to watch is apiserver_admission_step_admission_duration_seconds_bucket. This Prometheus metric breaks down time spent in validating_admission_policy (native) vs. admission_webhook (external).

If your P99 admission latency exceeds 100ms during this test, your developers will eventually face "Context Deadline Exceeded" errors and stalled rollouts.
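If you want to sanity-check the P99 outside of a dashboard, a quantile can be estimated from the cumulative histogram buckets by linear interpolation, which is the same general approach PromQL's histogram_quantile() takes. The sketch below is a simplified version of that idea; the `Bucket` shape, the `estimateQuantile` name, and the sample bucket counts are all made up for illustration and are not the metric's real bucket boundaries.

```typescript
// One cumulative histogram bucket: number of observations with duration <= le.
interface Bucket {
  le: number; // upper bound in seconds; Infinity stands for the "+Inf" bucket
  count: number; // cumulative count
}

// Estimates quantile q (0 < q <= 1) by interpolating linearly inside
// the bucket that contains the target rank.
function estimateQuantile(q: number, buckets: Bucket[]): number {
  const sorted = buckets.slice().sort((a, b) => a.le - b.le);
  if (sorted.length === 0) return NaN;
  const total = sorted[sorted.length - 1].count;
  if (total === 0) return NaN;

  const rank = q * total;
  let prevLe = 0;
  let prevCount = 0;
  for (const b of sorted) {
    if (b.count >= rank) {
      // Past the last finite bound there is nothing to interpolate against.
      if (b.le === Infinity || b.count === prevCount) return prevLe;
      return prevLe + (b.le - prevLe) * ((rank - prevCount) / (b.count - prevCount));
    }
    prevLe = b.le;
    prevCount = b.count;
  }
  return prevLe;
}

// Made-up sample: 1,000 admission requests observed during a test run.
const sampleBuckets: Bucket[] = [
  { le: 0.05, count: 900 },
  { le: 0.1, count: 980 },
  { le: 0.2, count: 1000 },
  { le: Infinity, count: 1000 },
];

const p99Ms = estimateQuantile(0.99, sampleBuckets) * 1000;
console.log(`P99 over the 100ms budget: ${p99Ms > 100}`);
```

With this sample the estimate lands at roughly 150ms, so the run would be flagged as over budget. The result is only as precise as the bucket boundaries allow, so treat it as an estimate rather than an exact percentile.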

The 2 AM Sanity Checklist

Before picking an approach to implement your policy strategy, ask yourself:

- Can I write it natively? If yes, use VAP/MAP.
- Do I need to fix or create resources on the fly? Then use Kyverno.
- Does my team hate YAML and Rego? Use Kubewarden.
- Does this policy reach beyond Kubernetes? Use OPA Gatekeeper.
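Read top to bottom, the checklist behaves like a small decision function. The sketch below encodes it that way; the `PolicyNeeds` fields and the `pickEngine` name are hypothetical, and treating the questions as a strict priority order is an assumption about how to read the list, not a rule.

```typescript
type Engine = "VAP/MAP" | "Kyverno" | "Kubewarden" | "OPA Gatekeeper";

// Hypothetical answers to the four checklist questions.
interface PolicyNeeds {
  expressibleNatively: boolean; // can the rule be written natively (CEL)?
  needsResourceGeneration: boolean; // must resources be fixed or created on the fly?
  teamAvoidsYamlAndRego: boolean; // does the team prefer general-purpose languages?
  extendsBeyondKubernetes: boolean; // must the policy cover more than Kubernetes?
}

// Walks the checklist in order and returns the first match.
// Returns null when no question applies, meaning the checklist gives no clear answer.
function pickEngine(needs: PolicyNeeds): Engine | null {
  if (needs.expressibleNatively) return "VAP/MAP";
  if (needs.needsResourceGeneration) return "Kyverno";
  if (needs.teamAvoidsYamlAndRego) return "Kubewarden";
  if (needs.extendsBeyondKubernetes) return "OPA Gatekeeper";
  return null;
}

console.log(
  pickEngine({
    expressibleNatively: false,
    needsResourceGeneration: true,
    teamAvoidsYamlAndRego: false,
    extendsBeyondKubernetes: false,
  }),
);
```

Here a rule that cannot be written natively and needs to create resources lands on Kyverno. In practice many teams will run native policies alongside one webhook engine, so the function is best applied per policy rather than per cluster.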

But more importantly, stop chasing the perfect policy engine. Pick the one your team can debug at 2 AM when a misconfigured policy is blocking a critical production hotfix.

— — —

https://open-policy-agent.github.io/gatekeeper/website/

https://github.com/kube-burner/kube-burner
